在国内,由于网络限制,无法访问一些内置的免费搜索插件。安装 searXNG 本地服务端后,按照教程中的连接方式,始终无法成功连接;采用 docker 方案,也因国内网络环境无法使用。因此,需要在本地编写一个基于 Python 的 Flask 服务程序,利用爬虫技术来提供联网搜索数据。

代码 1:

#!/usr/bin/python3

# _*_ coding: utf-8 _*_

#

# Copyright (C) 2025 - 2025

# @Title : 这是一个模拟searXNG服务器的程序实现本地搜索

# @Time : 2025/2/18

# @Author : gdcool

# @File : search-api.py

# @IDE : PyCharm

import requests

import random

import json

from bs4 import BeautifulSoup

from baidusearch.baidusearch import search as b_search

from urllib.parse import urlparse

from flask import Flask, request, jsonify

def is_valid_url(url):

"""检查URL是否是符合标准的完整URL"""

parsed_url = urlparse(url)

return parsed_url.scheme in ['http', 'https'] and parsed_url.netloc

def search_api(keyword, num_results):

"""上网搜索"""

search_results = b_search(keyword, num_results)

results = []

res_id = 0

# 生成一个0到999的随机数

sj_num = random.randint(0, 999)

for extracted_result in search_results:

res_title = extracted_result['title'].replace('\n', '')

res_abstract = extracted_result['abstract'].replace('\n', '')

res_url = extracted_result['url']

use_text = False

if is_valid_url(res_url):

# 自增长id

res_id = res_id + 1

# use_text是一个是否搜索url内部数据并替换给res_abstract提供更多简介参考数据(不太准确)

if use_text:

try:

# 请求头 使用一个随机数和一个自增长数,欺骗搜索引擎防止被屏蔽并发搜索,但是任然只允许8个并发。未修改时只允许6个可用并发。

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/132.0.%d.%d Safari/537.36'

% (sj_num, res_id)

}

# 发送HTTP GET请求到URL

response = requests.get(res_url, headers=headers)

# 检查请求是否成功

if response.status_code == 200:

# 打印页面内容的前几百个字符(避免打印过长内容)

# 使用BeautifulSoup解析HTML内容

soup = BeautifulSoup(response.content, 'html.parser')

# 提取网页中的纯文本内容并删除掉多余回车和制表符

text = soup.get_text().replace('\n', '')

text = text.replace('\r', '')

text = text.replace('\t', '')

res_abstract = text[:600]

else:

# print(f"无法访问URL,状态码: {response.status_code}")

pass

except requests.RequestException as e:

# 处理请求异常,如网络问题、超时等

# print(f"请求URL时发生错误: {e}")

pass

# 处理百度连接重定向真实连接

try:

# 请求头

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/132.0.%d.%d Safari/537.36'

% (sj_num, res_id)

}

res = requests.head(res_url, allow_redirects=True, headers=headers)

res_url = res.url

except requests.RequestException as e:

print(f"Error fetching URL {res_url}: {e}")

tmp_json = {

"title": res_title,

"search_id": res_id,

"content": res_abstract,

"url": res_url,

"engine": "baidu",

"category": "general",

}

results.append(tmp_json)

return results

app = Flask(__name__)

@app.route('/search', methods=['GET'])

def search():

query = request.args.get('q', '')

nums = request.args.get('num_results', 10)

if not query:

return jsonify({"error": "No query provided"}), 400

try:

nums = int(nums)

except ValueError:

return jsonify({"error": "Invalid number of results"}), 400

results = search_api(query, nums)

return jsonify({

"query": query,

"results": results

})

if __name__ == "__main__":

app.run(host='0.0.0.0', port=5000)百度搜索页面广告多且有并发屏蔽。相比之下,搜索 CSDN 时的数据纯净很多,搜索质量也远高于百度。下面是搜索csdn版的

代码 2:

#!/usr/bin/python3

# _*_ coding: utf-8 _*_

#

# Copyright (C) 2025 - 2025

#

# @Time : 2025/2/19

# @Author : gdcool

# @File : csdn_search.py

# @IDE : PyCharm

import requests

import random

import json

from bs4 import BeautifulSoup

from urllib.parse import urlparse

from flask import Flask, request, jsonify

def csdn_search(keyword):

url = f'https://so.csdn.net/api/v3/search?q={keyword}'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/132.0.0.0 Safari/537.36',

'Accept-Language': 'zh-CN,zh;q=0.9',

'Referer': 'https://so.csdn.net/so/search',

'Accept': 'application/json, text/plain, */*',

'Accept-Encoding': 'gzip, deflate, br',

'Connection': 'keep-alive'

}

try:

response = requests.get(url, headers=headers)

response.raise_for_status()

# 假设 response.text 是你的 JSON 数据

json_data = response.text

# 解析 JSON 数据

data = json.loads(json_data) # 假设 response.text 是你的 JSON 数据

json_data = response.text

# 解析 JSON 数据

data = json.loads(json_data)

# 提取 result_vos 列表

result_vos = data.get('result_vos', [])

results = []

search_id = 0

# 遍历 result_vos 列表并提取需要的字段

for result in result_vos:

title = result.get('title')

content = result.get('body')

url = result.get('url')

results.append({

'title': title,

'content': content,

'url': url,

'search_id': search_id,

"engine": "csdn",

"category": "general",

})

search_id += 1

except requests.RequestException as e:

return json.dumps({'error': str(e)}, ensure_ascii=False)

return results

app = Flask(__name__)

@app.route('/search', methods=['GET'])

def search():

query = request.args.get('q', '')

if not query:

return jsonify({"error": "No query provided"}), 400

results = csdn_search(query)

return jsonify({

"query": query,

"results": results

})

if __name__ == "__main__":

app.run(host='0.0.0.0', port=5000)将上面的代码保存到一个py文件中(如:app.py) ,然后运行执行命令:python3 app.py

执行python3 app.py可能会提示没有模块的错误,安装相关的python模块即可(如:pip install beautifulsoup4)

#使用 nohup 命令后台运行的程序会在你关闭终端后继续运行(ctrl+c不会终止)

nohup python3 app.py &

#终止

ps aux | grep app.py #查找正在运行的进程 ID

kill PID #如果 kill 命令无法终止进程,可以尝试使用 kill -9 PID 强制终止进程

或者

pkill -f app.py #按名称终止进程

# killall python3 命令终止所有正在运行的 Python3 进程

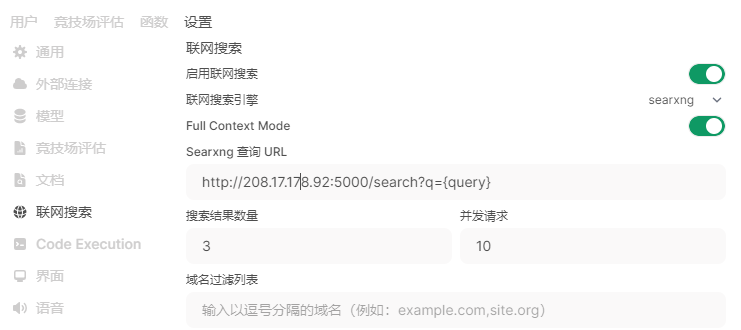

open-webui设置

open-webui 联网设置 引擎选择searXNG 查询url设置成 (http://127.0.0.1:5000/search?q=<query>)

过滤掉download.csdn.net

其它

代码3(必应):

from flask import Flask, request, jsonify

import requests

from bs4 import BeautifulSoup

app = Flask(__name__)

def bing_search(query):

# Bing 搜索URL

search_url = "https://cn.bing.com/search"

# 发送请求到Bing搜索

headers = {

'User-Agent': 'Mozilla/5.0'

}

params = {'q': query}

response = requests.get(search_url, headers=headers, params=params)

soup = BeautifulSoup(response.text, 'html.parser')

# 解析搜索结果 - 这里仅作为示例,具体选择器可能需要根据实际情况调整

results = []

for item in soup.select('.b_algo'):

title = item.h2.text

url = item.h2.a['href']

content = item.find('p').text

results.append({

"title": title,

"url": url,

"content": content,

"category": "",

"engine": "Bing",

"search_id": ""

})

return {"query": query, "results": results}

@app.route('/search', methods=['GET'])

def search():

keyword = request.args.get('q')

if not keyword:

return jsonify({"error": "Missing query parameter"}), 400

result = bing_search(keyword)

return jsonify(result)

if __name__ == '__main__':

app.run(host='0.0.0.0', port=5000)代码4(搜狗):

# 导入必要的库

from flask import Flask, request, jsonify # Flask框架的核心库

import requests # 用于发送HTTP请求

from bs4 import BeautifulSoup # 用于解析HTML文档

app = Flask(__name__)

def resolve_url(url):

"""

解析并返回最终的URL(处理重定向)

:param url: 初始URL

:return: 最终的URL

"""

try:

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'

}

response = requests.head(url, allow_redirects=True, headers=headers)

return response.url

except requests.RequestException as e:

print(f"Error fetching URL {url}: {e}")

return url

@app.route('/search', methods=['GET'])

def search():

"""

处理/search路径下的GET请求。

提取用户查询的关键词,通过搜狗搜索引擎获取结果,并以JSON格式返回。

"""

# 获取URL参数q的值作为搜索关键词

keyword = request.args.get('q')

if not keyword:

return jsonify({"error": "No query provided"}), 400 # 如果没有提供关键词,则返回错误信息

# 设置请求头,伪装成浏览器访问

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}

# 发送GET请求到搜狗搜索

response = requests.get(f"https://www.sogou.com/web?query={keyword}", headers=headers)

soup = BeautifulSoup(response.text, 'html.parser') # 解析返回的HTML文档

results = [] # 存储搜索结果的列表

# 遍历搜索结果项(根据实际网页结构调整选择器)

for div in soup.find_all("div", class_="vrwrap"): # 这里的class可能需要根据实际情况调整

try:

# 获取标题、链接和摘要等信息

title = div.find("h3").get_text()

initial_url = div.find("a")['href']

content = div.find("p").get_text() if div.find("p") else "" # 摘要可能不存在

# 检查URL是否缺少协议部分

if not (initial_url.startswith("http://") or initial_url.startswith("https://")):

# 假设搜狗使用的跳转链接格式为:http://www.sogou.com/link?url=ENnXjLJwKQZGg...

# 我们可以尝试直接访问这个链接来获取最终的URL

final_url = resolve_url(f"http://www.sogou.com{initial_url}")

else:

final_url = resolve_url(initial_url)

# 将信息添加到结果列表中

results.append({

"title": title,

"url": final_url,

"content": content,

"category": "", # 可选字段,这里留空

"engine": "sogou",

"search_id": "" # 可选字段,这里留空

})

except Exception as e:

# 如果在提取过程中出现异常,跳过该项继续处理下一个

print(f"Error processing result: {e}")

continue

# 返回包含查询关键词和结果的JSON对象

return jsonify({

"query": keyword,

"results": results

})

if __name__ == '__main__':

# 在本地运行服务器,监听566端口

app.run(host='0.0.0.0', port=5000)代码5(整合百度、CSDN、必应、搜狗):

下面是整合后的代码,每个搜索引擎将获取前6条搜索结果

from flask import Flask, request, jsonify

import asyncio

import aiohttp

from bs4 import BeautifulSoup

from urllib.parse import urljoin

app = Flask(__name__)

async def fetch(session, url, headers):

"""

使用aiohttp进行异步请求

:param session: aiohttp.ClientSession对象

:param url: 请求的URL

:param headers: 请求头

:return: 响应文本

"""

try:

async with session.get(url, headers=headers, timeout=10) as response:

return await response.text()

except Exception as e:

print(f"Error fetching {url}: {e}")

return None

async def resolve_url(base_url, url):

"""

解析并返回最终的URL(处理重定向和相对路径)

:param base_url: 基础URL

:param url: 初始URL

:return: 最终的URL

"""

if not url.startswith('http'):

url = urljoin(base_url, url)

try:

async with aiohttp.ClientSession() as session:

async with session.head(url, allow_redirects=True, timeout=10) as response:

return str(response.url)

except Exception as e:

print(f"Error resolving URL {url}: {e}")

return url

async def search_baidu(keyword, limit=6):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}

url = f"https://www.baidu.com/s?wd={keyword}"

async with aiohttp.ClientSession() as session:

html = await fetch(session, url, headers)

if not html:

return []

soup = BeautifulSoup(html, 'html.parser')

results = []

count = 0

for div in soup.find_all("div", class_="result"):

if count >= limit:

break

try:

title_tag = div.h3.a

title = title_tag.get_text() if title_tag else "No Title"

initial_url = title_tag['href'] if title_tag and 'href' in title_tag.attrs else ""

content_tag = div.find("div", class_="c-abstract")

content = content_tag.get_text() if content_tag else "No Description"

final_url = await resolve_url('https://www.baidu.com', initial_url)

results.append({

"title": title,

"url": final_url,

"content": content,

"category": "",

"engine": "baidu",

"search_id": ""

})

count += 1

except Exception as e:

print(f"Error processing Baidu result: {e}")

continue

return results

async def search_csdn(keyword, limit=6):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}

url = f"https://so.csdn.net/so/search/s.do?q={keyword}"

async with aiohttp.ClientSession() as session:

html = await fetch(session, url, headers)

if not html:

return []

soup = BeautifulSoup(html, 'html.parser')

results = []

count = 0

for li in soup.find_all("li", class_="blog-list-box"):

if count >= limit:

break

try:

title_tag = li.find("a", class_="blog-title")

title = title_tag.get_text().strip() if title_tag else "No Title"

initial_url = title_tag['href'] if title_tag and 'href' in title_tag.attrs else ""

content_tag = li.find("p", class_="text")

content = content_tag.get_text().strip() if content_tag else "No Description"

final_url = await resolve_url('https://so.csdn.net', initial_url)

results.append({

"title": title,

"url": final_url,

"content": content,

"category": "",

"engine": "csdn",

"search_id": ""

})

count += 1

except Exception as e:

print(f"Error processing CSDN result: {e}")

continue

return results

async def search_bing(keyword, limit=6):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}

url = f"https://cn.bing.com/search?q={keyword}"

async with aiohttp.ClientSession() as session:

html = await fetch(session, url, headers)

if not html:

return []

soup = BeautifulSoup(html, 'html.parser')

results = []

count = 0

for li in soup.find_all("li", class_="b_algo"):

if count >= limit:

break

try:

title_tag = li.h2

title = title_tag.get_text() if title_tag else "No Title"

initial_url = title_tag.a['href'] if title_tag and title_tag.a and 'href' in title_tag.a.attrs else ""

content_tag = li.find("p")

content = content_tag.get_text() if content_tag else "No Description"

final_url = await resolve_url('https://cn.bing.com', initial_url)

results.append({

"title": title,

"url": final_url,

"content": content,

"category": "",

"engine": "bing",

"search_id": ""

})

count += 1

except Exception as e:

print(f"Error processing Bing result: {e}")

continue

return results

async def search_sogou(keyword, limit=6):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}

url = f"https://www.sogou.com/web?query={keyword}"

async with aiohttp.ClientSession() as session:

html = await fetch(session, url, headers)

if not html:

return []

soup = BeautifulSoup(html, 'html.parser')

results = []

count = 0

for div in soup.find_all("div", class_="vrwrap"):

if count >= limit:

break

try:

title_tag = div.find("h3")

title = title_tag.get_text() if title_tag else "No Title"

url_tag = div.find("a")

initial_url = url_tag['href'] if url_tag and 'href' in url_tag.attrs else ""

content_tag = div.find("p")

content = content_tag.get_text() if content_tag else "No Description"

final_url = await resolve_url('https://www.sogou.com', initial_url)

results.append({

"title": title,

"url": final_url,

"content": content,

"category": "",

"engine": "sogou",

"search_id": ""

})

count += 1

except Exception as e:

print(f"Error processing Sogou result: {e}")

continue

return results

async def search_all_engines(keyword, limit=6):

"""

并发地从所有搜索引擎获取结果

:param keyword: 搜索关键词

:param limit: 返回的结果数量限制

:return: 结果列表

"""

tasks = [

search_baidu(keyword, limit),

search_csdn(keyword, limit),

search_bing(keyword, limit),

search_sogou(keyword, limit)

]

results = await asyncio.gather(*tasks, return_exceptions=True)

flat_results = [item for sublist in results if isinstance(sublist, list) for item in sublist]

return flat_results

@app.route('/search', methods=['GET'])

def search():

"""

处理搜索请求并汇总所有搜索引擎的结果

"""

keyword = request.args.get('q')

if not keyword:

return jsonify({"error": "No query provided"}), 400

loop = asyncio.new_event_loop()

asyncio.set_event_loop(loop)

results = loop.run_until_complete(search_all_engines(keyword))

loop.close()

if not results:

return jsonify({"query": keyword, "results": [], "message": "No results found"}), 404

return jsonify({

"query": keyword,

"results": results

})

if __name__ == '__main__':

app.run(host='0.0.0.0', port=5000)